100 Years Later

29/2020

ISBN 978-9985-870-48-8


The Use of Human Voice and Speech for Development of Language Technologies: the EU and Russian Data-protection Law Perspectives

The global character of research and business related to the language-technology sector requires those producing applications of technology in this domain to comply with relevant regulation – pertaining to intellectual property, personality rights, and data protection – applicable in multiple jurisdictions. The paper reports on research aimed at evaluating and defining conditions for the compatibility of various legal frameworks for the use of voice and speech in development and dissemination of language-technology applications from the EU and the Russian data-protection regulation perspective. The research fills a gap that is of particular relevance, in that the compatibility of Russian data-protection law with the General Data Protection Regulation (GDPR) with regard to the field of language technology has not been explored extensively. The authors draw from prior research to examine the implications in greater depth, with two foci. The first part of the article addresses the legal nature of human voice and speech. In the second part of the paper, the conditions for the development of language technologies are analysed.

Keywords:

personal data protection; language technologies; European Union; Russia

1. Introduction

Language technologies *1 (LTs) have become part of our day-to-day life. Their applications range from services for automatic text translation and spelling- and grammar‑checkers to speech-to-speech translators *2 and applications synthesising the human voice.

The development of LTs does not rely merely on written text; it also involves the human voice and speech. Here, ‘voice’ refers to the process of creating acoustic waves, and ‘speech’ is the process of creating phonemes. *3 In a narrow sense, the human voice can be regarded as a tool used to create speech (the speech vocalisation element).

The voice and speech are crucial elements of the communication process. Communication by voice is the most convenient and fastest means of interaction between people and between humans and computers. It is much easier to input large volumes of data, operate a control system, and thereby create a dialogue via voice than through other methods of communication. *4

Today, more and more products and services are based on LTs that use voice and speech. The practical utilisation of the voice and speech in an LT can be divided into four categories: speech synthesis *5 , voice biometrics *6 , speech analysis *7 , and speech recognition. *8

LTs are seldom focused on one particular country. They are disseminated across multiple jurisdictions. Several of the speech-recognition systems now in use are actively distributed by global digital companies (e.g., the Google Cloud speech API or Yandex SpeechKit), and they can be integrated easily into any program, app, or service developed nearly anywhere in the world. For example, such speech-recognition systems form the core elements of the following products: virtual ‘voice assistants’ (e.g., Siri *9 , Cortana *10 , Alexa *11 , and Alisa *12 ), Interactive Voice Response (IVR) systems, and vehicular voice-control systems (as used by Tesla, BMW, Ford, and Mercedes-Benz).

Given the global character of research and business related to LTs, producers of such technologies need to comply with the relevant regulation, including data-protection regulation. The aim of this article is to delineate, evaluate, and compare the legal frameworks for the use of voice and speech in the development and dissemination of LTs from the perspectives of EU and Russian data-protection law. Some references are also made to the Estonian legal landscape for data protection *13 where the data-protection rules of a specific EU country need to be consulted. Such consultation is necessary because the EU’s data-protection rules leave the Member States considerable flexibility to choose from among various harmonisation and implementation models; Estonian law has been chosen because the authors are familiar with it.

The foundation of data-protection law is the same for Europe and Russia: the European Convention on Human Rights (ECHR) *14 and the Convention for the Protection of Individuals with Regard to Automatic Processing of Personal Data (Convention 108). *15 Since the international framework is limited to the essential principles, it does not extensively harmonise data-protection laws. Therefore, EU law and Russian national law each possess distinctive elements and even conflict with each other in some respects. Such differences in legislation create legal challenges for technology companies that wish to provide their services in both Europe and Russia.

The European data-protection framework is established primarily by the General Data Protection Regulation (GDPR) *16 , which is directly applicable *17 in all EU member states. *18 Russian data-protection law relies on the following acts: Federal Law ‘On Personal Data’ *19 , Federal Law ‘On Information, Information Technologies and Information Protection’ *20 , and the ‘Yarovaya package law’ *21 . This list is not exhaustive. There are also legal acts that do not directly refer to the realm of data protection but do contain separate legal rules affecting the data-protection domain (e.g., Federal Law ‘On Communications’ *22 , from 2003). Given the scope of the research presented here and the complexity of Russia’s data-protection law, these acts are not the main focus of the article.

The EU and Russia were chosen for examination because they are neighbours, and in a globalised world such as ours it is neither possible nor reasonable to avoid co-operation across jurisdictions in technology development. The authors’ ambition in this regard is limited to addressing co-operation within the framework of LTs, with emphasis on data protection. The research holds further relevance in that no extensive comparative analysis of Russian data-protection law (significantly amended in 2015 *23 and 2017 *24 ) and the General Data Protection Regulation *25 with regard to the LT field has been undertaken before. *26 The article could also be useful to LT researchers and entrepreneurs who want to cover both the EU and Russia in their studies or products and services. The research results serve as a basis for further investigation of the personal-data aspects of several jurisdictions’ law.

The authors draw on prior research *27 while relying also on personal experience in the field of legal aspects of LTs. The article broadens the focus of LT-related legal research from that previously established, so as to include Russian data-protection law as well.

The second section of the article addresses the legal nature of human voice and speech from the perspective of data-protection law. The third section analyses the applicability of EU and Russian data-protection legislation from the LT perspective. The last section examines the principles and rules for voice- and speech-processing.

2. Human voice and speech as personal data

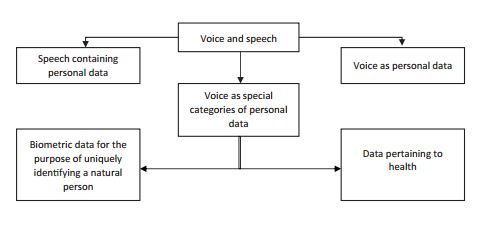

The question of whether human voice and speech should be treated as personal data influences the requirements imposed on the development of LTs. The authors therefore address three aspects of the human voice and speech (see Figure 1). The first involves the subject matter and content of speech (speech can contain personal data), the second involves the voice as personal data, and the third is whether the voice belongs to a special category of data that entails additional requirements for its processing (use). The voice is examined without a strong connection to the speech content.

Figure 1: Voice and speech from a data-protection perspective

We begin by considering the facets of speech. Data-protection laws apply if the speech contains personal data. Both European and Russian legal regulations define personal data as information related to an identified or identifiable natural person (the ‘data subject’). *28

The GDPR makes references to various types of personal data (e.g., biometric, genetic, and health data) *29 ; however, the most fundamental line is drawn between personal data in general and personal data falling in special categories. According to the GDPR, special categories of personal data consist of ‘data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership, and the processing of genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person’s sex life or sexual orientation’. *30 Special-category data are subject to more stringent requirements. *31

The Russian data-protection regulation, in turn, defines three main categories of personal data: general, special, and biometric personal data. Some of the legal acts specify a fourth category of personal data, ‘publicly available personal data’ *32 . However, Russia’s Federal Law ‘On Personal Data’ does not classify this as a separate and independent category. The ‘special’ category of personal data under these laws includes data pertaining to a person’s racial or ethnic origin, political opinions, religious or philosophical beliefs, health, or sex life *33 . The ‘biometric data’ category covers data related to a person’s physiological and biological characteristics that are used for identification purposes *34 (e.g., fingerprints, DNA, voice, the person’s image, the iris portion of the eyes, and/or body structure *35 ).

The three-category division among general, biometric, and special personal data is of fundamental importance for data-processing. For instance, as a general rule, the processing of special-category data is prohibited *36 , while biometric data may be processed, albeit only with the explicit consent of the data subject *37 . Distinguishing special-category data from biometric data also matters because the required level of protection differs *38 .

The information space considered to contain personal data is rather extensive. According to the Article 29 Working Party *39 (WP29), the concept of personal data covers information available in any of various forms (graphical, photographic, acoustic, alphanumeric and so forth) and maintained in storage of numerous types (e.g., on videotape, on paper, or in computer memory). *40

According to Russian law, general-category personal data *41 include such data as the name (surname, patronymic, etc.); the year, month, day, and place of birth; one’s address; the identity of one’s family; social or property status; education, profession, or income *42 ; passport data *43 ; e-mail address *44 ; and information on crossing of state borders *45 .

Another relevant and often misinterpreted issue is the protectability of publicly available personal data. The matter has been settled in EU case law. The European Court of Justice has explained that the use of data collected from documents in the public domain is still processing of personal data. *46 The public availability of personal data has relevance in the context of processing of special categories of personal data, with the rule being that processing of data in the special categories is prohibited. *47 However, this prohibition does not apply if the processing involves personal data that have been manifestly made public by the data subject. *48

The Russian data-protection laws provide that the personal data in question should be considered publicly available if the data subject gives explicit consent *49 for inclusion of the data in the relevant publicly accessible sources *50 . The publicly available data still are subject to the data-protection regulation *51 , but the threshold level of protection is much lower for data in this category than for other categories of personal data. For instance, there is no need to obtain consent for processing *52 or to ensure a confidentiality regime *53 for general- and special-category personal data in this case (consent need only be received once for making the data publicly available). At the same time, the rule explicitly does not extend to publicly available biometric data, whose processing still requires the consent of the data subject.

From a language-technology perspective, it is not so relevant when precisely the data subject’s rights arise. However, when they end is crucial. *54 The GDPR does not apply to personal data of deceased persons. *55 That said, variations may exist in national legislation, creating differences between EU countries in such respects. Therefore, it is important to consult the laws of each specific EU country that is relevant. For instance, under the Estonian Personal Data Protection Act, the protection of rights extends 10 years after the death of the data subject except in cases wherein the data subject died as a minor, for which the term of protection is 20 years. Any heir may give consent for processing. *56 Other Member States may take different approaches.

Russian data-protection regulation extends to the personal data of deceased persons *57 . The processing of such data must comply with data-protection rules (including the requirement to gain consent for the processing). *58 Russia’s data-protection law does not specify a duration for the protection of personal data of deceased persons. One solution is to rely on an analogy to protection of a person’s private life *59 , which is likewise protected after the person’s death. *60 Following this analogy, we could presume that the duration of such protection extends to at least 75 years after the death of the data subject *61 .
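The post-mortem protection periods discussed above reduce to simple date arithmetic. The Python sketch below is illustrative only: the jurisdiction codes and function names are hypothetical, the Estonian terms (10 years, or 20 years where the data subject died as a minor) are taken from the text, and the 75-year Russian figure is the authors’ analogy-based presumption rather than a statutory term.

```python
from datetime import date

# Terms as described in the text. The Russian figure is the authors'
# presumption by analogy with private-life protection, not a statutory term.
EE_YEARS, EE_MINOR_YEARS, RU_PRESUMED_YEARS = 10, 20, 75


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years; 29 February falls back to 28 February."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # 29 February in a non-leap target year
        return d.replace(year=d.year + years, day=28)


def protection_ends(death: date, jurisdiction: str, died_as_minor: bool = False) -> date:
    """Return the date on which post-mortem data protection ends (simplified model)."""
    if jurisdiction == "GDPR":
        # The GDPR itself does not apply to the personal data of deceased persons.
        return death
    if jurisdiction == "EE":
        return add_years(death, EE_MINOR_YEARS if died_as_minor else EE_YEARS)
    if jurisdiction == "RU":
        return add_years(death, RU_PRESUMED_YEARS)
    raise ValueError(f"unknown jurisdiction: {jurisdiction}")


def is_protected(on: date, death: date, jurisdiction: str, died_as_minor: bool = False) -> bool:
    """True if a deceased person's data are still protected on the given date."""
    return on < protection_ends(death, jurisdiction, died_as_minor)
```

Under this model, a voice recording of a speaker who died on 1 May 2010 would remain protected in Estonia until 1 May 2020 (or 1 May 2030 had the speaker died as a minor), whereas under the presumed Russian analogy protection would run until 2085.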

The identifiability of a natural person is a critical issue in determination of whether data‑protection laws apply. The authors agree with the WP29 reasoning that ‘a mere hypothetical possibility to single out the individual is not enough to consider the person as “identifiable”’. *62

It is also pointed out in the literature that identifiability depends on the context. Data items not identifying for one person might be identifying for another. *63 It is also suggested that ‘the categorisation of data as identifiable or non-identifiable is a matter of self-assessment by the controller; the controller determines how the data are to be categorised and treated’. *64 This does not, however, mean that the data are in reality non-personal. The controller cannot avoid liability just by considering all the data processed non-personal.

The use of non-personal data is subject to fewer legal restrictions. *65 Data may be non-personal from the outset *66 , or personal data may be anonymised and thereby rendered non-personal. With regard to the latter, two points should be borne in mind. First, the definition of processing of personal data is quite broad in the GDPR *67 and Russian law alike *68 ; accordingly, the anonymisation process itself is subject to personal-data protection requirements. Second, creating fully anonymised datasets without the data losing their value is a challenging task. *69 This is especially true for voice and speech, since the voice itself can identify the speaker.

The next two aspects to be considered pertain to the human voice as such. In the scientific literature, the voice is considered biometric data. *70 Both jurisdictions considered here distinguish biometric data from the other categories of personal data and define such data as data about the physical, physiological, or behavioural characteristics of a natural person. *71 Most commonly, LTs use voice and speech as biometric data for two purposes: 1) to identify and verify a person (voice biometrics) and 2) to acquire and analyse new information about a person (voice and speech analysis). *72

From the biometrics perspective, the voice samples (or voiceprints) are used to identify and verify who someone is in a similar manner to DNA, fingerprints, or face recognition. *73 Depending on the operation mode of the biometric system, the voiceprint may be compared with one particular voiceprint to verify the claimed identity (verification mode) or the system may scan a database of voiceprints to find the matching one and thereby establish the speaker’s identity (identification mode). *74 The voice samples (biometric personal data) within voice-biometrics frameworks are often used in combination with other categories of personal data.
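The difference between the two operation modes can be illustrated with a short Python sketch. It assumes that voiceprints have already been extracted as fixed-length numeric vectors (speaker embeddings) and compares them by cosine similarity; the names, the similarity measure, and the 0.8 threshold are hypothetical choices, not a description of any particular product.

```python
import math
from typing import Dict, List, Optional

Voiceprint = List[float]  # assumed fixed-length speaker embedding

# Hypothetical acceptance threshold; real systems tune this on evaluation data.
THRESHOLD = 0.8


def cosine(a: Voiceprint, b: Voiceprint) -> float:
    """Cosine similarity between two voiceprints (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify(probe: Voiceprint, claimed: Voiceprint, threshold: float = THRESHOLD) -> bool:
    """Verification mode (1:1): does the probe match the claimed identity's voiceprint?"""
    return cosine(probe, claimed) >= threshold


def identify(probe: Voiceprint, gallery: Dict[str, Voiceprint],
             threshold: float = THRESHOLD) -> Optional[str]:
    """Identification mode (1:N): return the best-matching enrolled speaker, if any."""
    best_id, best_score = None, -1.0
    for speaker_id, enrolled in gallery.items():
        score = cosine(probe, enrolled)
        if score > best_score:
            best_id, best_score = speaker_id, score
    return best_id if best_score >= threshold else None
```

Identification mode presupposes a database of enrolled voiceprints, which is itself a store of biometric personal data.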

From a speech-analysis perspective, voice and speech patterns can be investigated to obtain additional information about the speaker. For example, voice and speech analysis can be used in medical applications *75 because it can provide information about stress levels, emotional state *76 , or other health details of the person. Where mental state, stress level, or other medical information is detected, the data obtained can additionally be considered information pertaining to the person’s health.

Although the human voice contains biometric information and potentially health-related data, the crucial issue in this regard is whether this means that the voice as such always belongs to a special category of data. The GDPR’s definition of special categories of data *77 refers to two instances of processing wherein the voice can be deemed to belong to special categories: 1) the voice as health data and 2) the voice as biometric data for the identification of a natural person.

If we presume that the voice per se (even without any relevant content) always contains health-related information (which is disputable), then it would be regarded as a special category of personal data both in the EU and per Russian data-protection law. *78 However, a question remains as to what kind of information should be considered health-related and how much of the health-related information can be extracted from the voice.

Russia’s data-protection regulation does not provide a definition addressing precisely what information is connected with information pertaining to health. At the same time, Russian regulation of health protection includes the concept of medical secrecy, under which information about requests for medical assistance, information about health and diagnoses or other information received during medical examinations and treatment constitutes a medical secret. *79 Data with ‘medical secret’ status receive special legal protection, and the processing and disclosure thereof are prohibited, with certain specified exceptions. *80 The concept of medical secrecy is associated primarily with medical assistance requests and provision of medical treatment. The information forming a medical secret is a subset of what is deemed to be personal data having to do with health.

In contrast, European data-protection regulation does define the boundaries of information pertaining to health. *81 According to the GDPR, the information related to health is the personal data that refer to the physical and mental state of the person, along with information about the provision of medical services and related information about health status. *82 Health-related data are subject to special regulation and protection.

In the authors’ opinion, the voice does not always contain health data. Not all television and radio programmes, interview content, etc. should be considered to belong to a special category of personal data. Where the voice is processed in order to collect data about health, however, it does belong to a special category of personal data.

There is no disputing that the voice as such is biometric data. The question is whether this leads to it counting as a special category of data. According to the GDPR, only biometric data used for uniquely identifying a natural person belong to a special category of data. *83 In other words, it is insufficient to deem the voice biometric data without further consideration. Rather, for it to qualify as a special category of data, the voice processing must be done for identification purposes. In this case, the data processing determines its nature. The situation is similar to that of photos depicting people – after all, one’s appearance constitutes biometric data. For the latter case, the GDPR provides the following clarification:

The processing of photographs should not systematically be considered to be processing of special categories of personal data as they are covered by the definition of biometric data only when processed through a specific technical means allowing the unique identification or authentication of a natural person. *84

The authors of this article presume that the foregoing explanation is valid also for the human voice.

Russian data-protection law treats information about physiological and biological characteristics as biometric data only if the operator *85 uses it for purposes of identification *86 . The identification purpose behind the data-processing is the critical criterion for classifying the given personal data as biometric personal data *87 . As in the EU approach, where the voice becomes special-category data only when processed for identification, the voice is not deemed biometric data under Russian data-protection law unless it is used for identification purposes.
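The reasoning of this section, under which the purpose of processing rather than the voice itself determines its category, can be condensed into a small decision function. The Python sketch below is a deliberately simplified model of the authors’ reading of the two regimes; the purpose labels and category strings are hypothetical, and the function captures neither borderline cases nor the exceptions each law provides.

```python
from enum import Enum
from typing import Tuple


class Purpose(Enum):
    """Hypothetical purpose labels for a voice-processing operation."""
    GENERAL = "general"                # e.g. transcribing a broadcast or interview
    IDENTIFICATION = "identification"  # voice biometrics (verification or identification)
    HEALTH = "health"                  # e.g. detecting stress or emotional state


def classify_voice(purpose: Purpose, identifiable: bool = True) -> Tuple[str, str]:
    """Return (EU category, Russian category) for voice data, per the article's reading."""
    if not identifiable:
        # No identifiable natural person: data-protection law does not apply.
        return ("non-personal", "non-personal")
    if purpose is Purpose.IDENTIFICATION:
        # GDPR: biometric data for unique identification is a special category.
        # Russia: characteristics used for identification are biometric data.
        return ("special (biometric)", "biometric")
    if purpose is Purpose.HEALTH:
        # Health data fall within the special category in both regimes.
        return ("special (health)", "special (health)")
    # Otherwise an identifiable voice is general (non-sensitive) personal data.
    return ("general", "general")
```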

Whether the voice and speech are considered to be personal data plays a crucial role in the processing and in compliance with the data-protection rules. There is commonality between the European and the Russian approach to personal data and the categories thereof in that technology companies are required to treat information such as voiceprints, health information, and other subject data as personal data and to comply with domestic data‑protection regulations on that basis. In the following section, the two regulation systems are analysed and compared. The voice and speech are examined as both non‑sensitive, general personal data and personal data belonging to a special category of personal data (biometric data or data pertaining to health).

3. The applicability of EU and Russian data-protection legislation

The literature emphasises that the right to data protection is a response to technological developments. *88 Access to huge volumes of data is becoming ever easier as cross-border data flows grow, driven by advances in information and communication technologies and a shift toward a digital economy. *89 This forces entrepreneurs to comply with the data-protection laws of all countries where their products and services are offered. For example, the social network LinkedIn was blocked and can no longer be accessed from Russian territory because it breached the Russian data-localisation requirement *90 , discussed below; at that time, LinkedIn Corporation had no legal entity in Russian territory (e.g., a branch or representative office).

This section addresses the question of when the EU and Russian data-protection laws are applicable. The applicability of such laws depends on their territorial and material scope. Let us consider European law first. EU law defines protection of personal data as a fundamental human right *91 , without any limitation based on nationality or residence. *92 The GDPR has extraterritorial effect: it applies both to entities established in the EU and to entities offering goods or services to data subjects in the EU or monitoring their behaviour there. *93 The latter case concerns targeting of the EU market (i.e., the data subject is within EU territory). *94 One indicator of targeting the EU market may be the use of a language or currency generally used in at least one of the EU Member States. *95

In practical terms, the extraterritorial effect creates an obligation to comply with the GDPR’s requirements. Entities not established in the EU whose operations target EU territory must designate a representative in the EU. *96
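The territorial-scope rule and the representative obligation can be expressed as two boolean tests. This Python sketch is a simplification under stated assumptions: the class and field names are hypothetical, the targeting and monitoring facts are taken as already established, and it ignores the exemptions that the GDPR provides from the representative obligation.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical description of an LT provider's situation."""
    established_in_eu: bool
    offers_goods_or_services_in_eu: bool  # e.g. an EU language or currency may indicate targeting
    monitors_behaviour_in_eu: bool


def gdpr_applies(e: Entity) -> bool:
    """Simplified territorial-scope test: establishment, targeting, or monitoring."""
    return (e.established_in_eu
            or e.offers_goods_or_services_in_eu
            or e.monitors_behaviour_in_eu)


def needs_eu_representative(e: Entity) -> bool:
    """Non-EU entities caught by the extraterritorial rule must designate a representative."""
    return gdpr_applies(e) and not e.established_in_eu
```

Under this model, a speech-recognition service based outside the EU that offers a German-language interface to users in Germany would fall within the GDPR and would need to designate an EU representative.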

In contrast, Russian data-protection law does not have extraterritorial effect. It is not applicable to non-residents processing personal data of Russian citizens abroad. There are two exceptions, however. The first involves a ‘data-localisation requirement’, and the second is related to the implementation of the Yarovaya package law.

The localisation requirement for Russian citizens’ personal data was introduced to Russian data-protection law by Federal Law No. 242-FZ of 21 July 2014, which entered into force on 1 September 2015. *97 The amendment added a new obligation for data-processing operators: their collection, storage, and use of personal data of Russian citizens must involve only databases located on Russian territory. *98

This rule mandating local handling of Russian citizens’ data must be complied with where the following conditions are met: 1) the information contains personal data; 2) the personal data are collected, meaning that the data-processing operator has received the data from third parties; 3) the data are processed by the operator, or their processing is organised by the operator; and 4) the personal data pertain to Russian citizens. *99

Restricting the localisation requirement to Russian citizens creates problems: for instance, how is an operator to determine the citizenship of a person who speaks to a voice assistant, or to detect that the voiceprint being processed belongs to a Russian citizen? The Russian data-protection authority (the Roskomnadzor) attempted to solve the problem by issuing an official opinion. *100 In that opinion, the authority replaced the citizenship criterion with a reference to territory. According to the opinion, where there is doubt about the data subject’s citizenship, all information collected and processed within Russian territory must be ‘localised’ to databases located in Russia. *101 Applying this principle solves the problem of identifying citizenship. However, it leaves out Russian citizens’ personal data collected outside Russian territory.
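The four statutory conditions, together with the territorial fallback from the Roskomnadzor opinion, can be sketched as a single check. The Python below is a hypothetical simplification: the record fields and the "RU" citizenship code are illustrative assumptions, and the sketch does not model how an operator would establish each fact in practice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CollectedItem:
    """Hypothetical record describing one item of collected data."""
    contains_personal_data: bool
    collected_by_operator: bool
    processed_or_organised_by_operator: bool
    citizenship: Optional[str]  # e.g. "RU"; None when the citizenship is unknown
    collected_in_russia: bool


def must_localise(item: CollectedItem) -> bool:
    """Simplified test for the Russian data-localisation requirement."""
    # Conditions 1) to 3): personal data collected and processed (or organised) by the operator.
    if not (item.contains_personal_data
            and item.collected_by_operator
            and item.processed_or_organised_by_operator):
        return False
    if item.citizenship is None:
        # Roskomnadzor opinion: in case of doubt, fall back on the territory of collection.
        return item.collected_in_russia
    # Condition 4): the data pertain to a Russian citizen.
    return item.citizenship == "RU"
```

The fallback branch also reflects the gap noted above: a Russian citizen who speaks to a voice assistant abroad, and whose citizenship the operator does not know, is not caught by the territorial criterion.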

The application of Russia’s data-localisation rule poses a significant hurdle for companies. The above-mentioned LinkedIn case is an excellent example, and it is far from the only one. Recently, the Roskomnadzor initiated review proceedings to determine the level of compliance with the data-localisation rule shown by the Facebook group of companies. *102

The second exception with regard to the nationally bounded character of Russian data‑protection law is found under the Yarovaya package law. This law is not directly connected to data protection, and its material scope differs from that of the Russian federal law ‘On Personal Data’ and of the GDPR. The Yarovaya package law mostly concerns the public sector (public safety and national security). To some extent, it resembles the EU’s Data Protection Police Directive. *103 Since the Yarovaya package law creates new obligations related to the storage and processing of data, its applicability is analysed below.

The Yarovaya package law is a legislation package consisting of two federal laws that introduce amendments to the acts on combating terrorism. It obliges providers of telecommunication services and organisers of information dissemination to store the relevant Internet traffic data (text and voice messages, sounds, photos, videos, and files’ metadata) for periods ranging from six months to three years. *104

The first issue that arises is that of the ‘organiser of information dissemination’ concept. The legal definition provided *105 is too broad and could be taken to refer to virtually every Web page that interacts with a user (e.g., using cookies). Nor does the definition contain a national restriction, and it could be considered to cover large Internet companies, messaging services, blog-hosting platforms and the owners of blogs hosted on such platforms, the owners and ‘tenants’ of domain names, etc. This uncertainty in the definition creates a legal risk for any company with a connection to the Russian market that might be covered by the description ‘organiser of information dissemination’. That risk makes it necessary to comply with the legal provisions cited above.

Compliance of communication service providers and organisers of information dissemination with the requirements of the Yarovaya package law could force companies into breaching other obligations – for instance, under their contracts (confidentiality obligations etc.), national legislation (e.g., the various national acts implemented in transposition of Directive (EU) 2016/680), and the GDPR’s rules. One of the most significant examples of the far‑reaching effects of the Yarovaya package law is the Telegram case *106 , involving blocking of services within Russian territory. *107

A summary of the framework provided above is presented in Table 1.

Table 1: Summary framework

| Application of EU and Russian data-protection legislation | European data-protection regulation | Russian data-protection regulation |
|---|---|---|
| Sources | The General Data Protection Regulation (GDPR) | Federal Law ‘On Personal Data’; Federal Law ‘On Information, Information Technologies and Information Protection’; the Yarovaya package law |
| Extraterritorial effect? | Yes | Only per the data-localisation rule and the Yarovaya package law |
| Applicability | EU companies; non-EU companies with business activities within EU territory (targeting/monitoring activity) | Data-localisation rule: the data subject is a Russian citizen; processing performed within Russian territory. Yarovaya package law: the provider of communication services; the organiser of information dissemination |
| Specified connection with citizenship? | No | Data-localisation rule: yes. Yarovaya package law: neutral / not addressed (citizenship-agnostic definitions) |

One of the primary data-protection problems encountered in the development of language technologies relates to cloud computing and cross-border data flows. For instance, most voice assistants provide their services by means of cloud computing, and speech-recognition systems are also often built on cloud services, Yandex SpeechKit being one example. The main problem currently affecting cross-border data flows between European countries and Russia is legal complexity arising from friction among the GDPR, the Russian localisation requirement, and the requirements of the Yarovaya package law. To address the data-localisation rule, the Roskomnadzor published a letter aimed at tackling the problems that the rule creates for cross-border data flows. According to that letter, data of Russian citizens (or, in cases of doubt about the data subject’s citizenship, data collected within Russian territory) should initially be collected and stored in databases physically located on Russian territory, after which the material may be copied and transferred to databases situated in other countries. *108 This leaves several questions open, and the legal risks arising from the rules of the Yarovaya package law remain unresolved. These issues could negatively affect further co-operation between European countries and Russia.
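The sequence described in the Roskomnadzor letter (collect and store in Russia first, copy abroad afterwards) resembles a primary/replica write pattern. The Python sketch below models it with in-memory dictionaries standing in for databases, plus an audit check on the order of writes; the function names and location codes are hypothetical, and the sketch does not address the unresolved risks under the Yarovaya package law.

```python
from typing import Dict, List, Tuple

Store = Dict[str, bytes]
Event = Tuple[str, str]  # (storage location code, record id)


def ingest(record_id: str, payload: bytes, ru_primary: Store,
           foreign_replicas: Dict[str, Store], log: List[Event]) -> None:
    """Write to the database in Russia first, then copy to databases abroad."""
    ru_primary[record_id] = payload  # initial collection and storage in Russia
    log.append(("RU", record_id))
    for location, replica in foreign_replicas.items():
        replica[record_id] = ru_primary[record_id]  # cross-border copy of the local record
        log.append((location, record_id))


def first_write_was_local(log: List[Event]) -> bool:
    """Audit helper: every record must be written in Russia before anywhere else."""
    seen = set()
    for location, record_id in log:
        if record_id not in seen:
            if location != "RU":
                return False
            seen.add(record_id)
    return True
```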

4. The principles and rules for voice- and speech-processing

Since voice and speech are protected as personal data, their use (processing) is subject to several requirements. European and Russian law both define data-processing broadly, so that it covers virtually all activities performed with the given personal data. For instance, European and Russian data-protection regulations alike provide that processing involves operations with data carried out by either automatic or non-automatic means, including collecting, recording, structuring, storing, using, and transmitting. *109

In practice, several parties are usually involved in the processing of data. The Russian and European data-protection schemes differ in how they identify the parties performing data-processing activities. Russia's data-protection regulation defines only one such party: the 'operator'. Russian legislation describes the operator as a legal person, natural person, or national or local government authority that processes personal data and determines the scope, means, and purposes of the processing. *110 The GDPR, by contrast, distinguishes two parties involved in data-processing activities (both roles may be held by a single entity): the 'controller' and the 'processor'. The processor is responsible for the technical side of the data-processing and performs the processing on behalf of the controller. *111 The controller determines the means and purposes of the processing. Of these two roles, the Russian operator most closely corresponds to the controller.

Russian law does not define the processor, that is, the person who technically processes the data. However, under Russia's data-protection regulatory structure, the operator has the right to delegate data-processing to a third party. *112 Russian law thus folds the functions of the processor into the legal concept of the third party.

Internationally, the fundamental principles for data-processing are set forth in Article 5 of Convention 108 *113 and reflected in both Article 5 of the GDPR and Article 5 of Russia's federal law 'On Personal Data'. According to Article 5 of the Convention, personal data shall be lawfully obtained and processed, *114 processing must be fair, *115 processing must be limited to the purposes for which the data were stored, the data must be relevant and accurate, and the data shall be kept in a form that permits identification of the data subject for no longer than the purposes of their storage require. *116 These fundamental principles guarantee a certain minimum level of protection in data-processing. The GDPR supplements the list with the accountability principle, a principle developed by the Organisation for Economic Co-operation and Development (OECD). Under it, the controller is responsible for, and must be able to demonstrate, compliance with the principles above. *117 Neither Russian data-protection regulation nor Convention 108 expressly states this principle.

These fundamental principles lay the groundwork for the rules on data-processing. The rules derived from them can be divided into three groups: those concerning lawful, secure, and transparent processing. The rules of both jurisdictions are discussed below according to this classification. In addition, voice and speech may be either sensitive data (where they fall into special categories of personal data) or non-sensitive data, so the regulatory framework for processing must be examined for both categories.

First, the principle of lawfulness means that processing must be carried out in strict compliance with the law and that an appropriate legal ground for it must exist.

Under the GDPR, non-sensitive data are lawfully processed if one of the following grounds exists: 1) the data subject’s consent, 2) performance of a contract, 3) compliance with a legal obligation, 4) protection of vital interests, 5) performance of a task carried out in the public interest, and 6) processing for purposes of pursuing legitimate interests. *118

Russian law provides additional grounds for processing non-sensitive data. For instance, non-sensitive data may be lawfully processed for statistical purposes (which Russian data-protection regulation treats as a separate and independent ground for data-processing) *119 or where the processing is performed to fulfil non-mandatory terms of the law with regard to information disclosure, among other grounds. *120

As a general rule, the GDPR prohibits the processing of special categories of personal data (e.g., biometric and health data). *121 However, processing is permitted in the following exceptional cases: 1) explicit consent; 2) carrying out obligations and exercising specific rights in the field of employment and social security and social protection law; 3) protection of vital interests of the data subject or of another natural person where the data subject is physically or legally incapable of giving consent; 4) legitimate activities carried out with appropriate safeguards by a foundation, association, or any other not-for-profit body with a political, philosophical, religious, or trade-union aim, on condition that the processing relates solely to the members or former members of the body or to persons who have regular contact with it in connection with its purposes and that the personal data are not disclosed outside that body without the consent of the data subjects; 5) processing of personal data that are manifestly made public by the data subject; 6) processing necessary for the establishment, exercise, or defence of legal claims; 7) processing necessary for reasons of substantial public interest; 8) processing necessary for purposes of preventive or occupational medicine; 9) processing necessary for reasons of public interest in the area of public health; and 10) processing necessary for archiving purposes in the public interest, scientific or historical research purposes, or statistical purposes. *122

Russian data-protection law takes a different approach to sensitive and biometric data, so the rules for processing voice and speech depend on whether the data in question qualify as health data or as biometric data. Where voice and speech involve health data, the regulation of their processing is similar to that under the GDPR: the general rule is that processing of this type of data is prohibited. *123 In contrast, Russia's data-protection law does not subject biometric data to the general prohibition that applies to special categories of personal data. Instead, it permits processing only after the data subject's consent has been obtained. *124

For the development of language technologies, the most relevant grounds are the data subject’s consent and legitimate interest.

The second group of data-processing rules concerns security. Under the European approach, implementing the relevant security measures is an obligation of both the controller and the processor; the Russian approach assigns this obligation to the operator. Security measures can be divided into two main groups: technical and organisational. Implicit in the European approach is that appropriate security measures should be built in by design *125 and applied by default. *126 The GDPR provides a non-exhaustive list of technical measures to be applied in data-processing, *127 among them pseudonymisation and encryption of personal data and measures ensuring the confidentiality, integrity, and availability of the data. The security requirements set forth in the GDPR follow the ISO 27001 standard. *128 Organisational measures, in turn, are implemented within the company with regard to employees and other workers. They include informing these individuals about data-security rules and clarifying their responsibilities and duties with regard to data protection. *129 The Russian approach, too, presumes that data-processing should employ both technical and organisational security safeguards; *130 however, the law 'On Personal Data' sets out the required security measures only in general terms.

The last group of rules, those related to transparency of processing, concerns the data subject's right to understand the essence of any automated processing of his or her personal data, the main purposes of that processing, and the identity and habitual residence or place of business of the controller. *131

The principles and rules for data-processing form the basis that companies conducting business activities in Russia or the EU must take into account. Compliance with these rules requires awareness of the scope of the data subject's rights with regard to data protection. These rights are not absolute and must be balanced against other fundamental rights, such as freedom of thought, freedom of expression and information, religious freedom, and linguistic diversity. *132 The right to linguistic diversity may play an especially significant role in the further development of language technologies and in the use of voice and speech for that purpose.

5. Conclusions

In the field of LT development, the European and Russian approaches to data protection are close in their underlying principles yet far apart in their specific rules. The above analysis of European and Russian legislation shows that the two jurisdictions rely on similar international legal instruments and follow the same internationally recognised data-protection principles; however, their data-protection regulations are not fully harmonised. For instance, the EU and Russia identify different subjects of data-processing and assign those subjects obligations of different scope. Moreover, the EU and Russian data-protection schemes diverge in the significance they attach to the data subject's citizenship and differ in their territorial reach (most importantly, as a general rule, Russia's data-protection legislation does not have extraterritorial effect).

Examination of the relevant laws shows that voice and speech are considered personal data in both jurisdictions. Their use in developing language technologies must therefore comply with data-protection law.

The human voice may constitute ordinary personal data, or it may fall within special categories of personal data. Which rules apply depends on such factors as the type of personal data involved (does the voice fall under special categories of personal data?), the form in which the data are stored (is the material anonymised or not?), the place where the processing takes place, the particular circumstances, and the purpose of the processing. Moreover, in some cases, the applicability of the law depends on the data subject's citizenship and the territorial focus of the processing activities.

The differences and conflicting legal norms between these jurisdictions create legal obstacles to co-operation between them. In practice, entities whose language technologies target both the EU and Russia must comply simultaneously with two regulatory systems that are not compatible with each other. A company may therefore have to choose which regime to breach in order to comply with the other. This points to a clear need for further research aimed at identifying a way to resolve the conflict of norms.

pp.71-85